Listicles Drop 37%, Reviews Jump 637%: Branded vs Non-Branded Prompt Study

Key Takeaways:

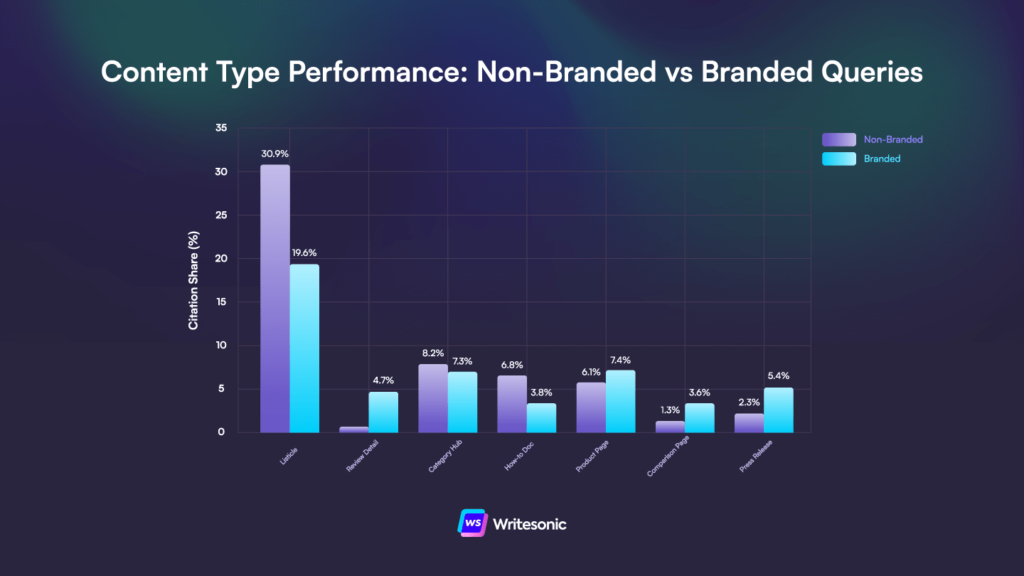

- Listicles lose more than a third of their citation share when prompts mention a brand (30.92% → 19.56%), while review content jumps 637%. Product pages see modest gains on every platform (1.2x overall), press releases more than double their share (2.3x), and comparison pages nearly triple (2.7x).

- Pricing pages drop 25-96% in branded contexts, with Claude all but abandoning them (0.72% → 0.03%). Meanwhile, user-generated content climbs 46%, with Copilot, Google AI, and Grok showing the largest shifts toward community discussions.

- Claude cites press releases 3.4x more in branded queries than non-branded. ChatGPT and Claude both drop how-to documentation by ~57% when users mention specific brands. Every platform increases product page citations in branded contexts, but none prioritize pricing pages.

Another week, another peek under the LLM hood. Last time, I looked at platform citation behavior across industries to see whether any glaring differences stood out. Today, I’m sharing findings on how citation patterns rearrange themselves when users mention specific brand names in their prompts instead of asking about generic categories.

So, does “best AI search tools” pull the same content as “Writesonic alternatives”? To find out, we once again analyzed 280+ million citations across 8 platforms, splitting queries into branded (mentions a specific company) and non-branded (generic category searches).

Finding #1: Listicles collapse in branded prompts

- Non-branded prompts: 30.92% of citations

- Branded prompts: 19.56% of citations

- Change: -36.7%

AI engines still surface listicles in branded contexts, but they go from being LLM darlings to losing more than a third of their share. Some platforms show steeper drops, e.g., Copilot goes from 32.6% to 15.4% (-52.8%).

In non-branded queries, listicles often dominate, likely because they’re the best format for “what are my options” style questions. Branded queries bring more content types into play: reviews, comparison pages, and press releases all compete for the same evaluation intent, diluting the listicle share from about 31% to about 20%.
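For anyone who wants to reproduce the percentages, here is a minimal Python sketch of the relative-change arithmetic. The function name `relative_change` is my own, and the inputs are just the shares quoted above.

```python
def relative_change(before: float, after: float) -> float:
    """Percent change from `before` to `after`, relative to `before`."""
    return (after - before) / before * 100


# Listicle citation shares (in percent) quoted above.
overall = relative_change(30.92, 19.56)
copilot = relative_change(32.6, 15.4)

print(f"All platforms: {overall:+.1f}%")  # -36.7%
print(f"Copilot:       {copilot:+.1f}%")  # -52.8%
```

Note that this is a relative drop. In percentage points, listicles fall by about 11 (30.92 minus 19.56).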

But if listicles drop, what replaces them?

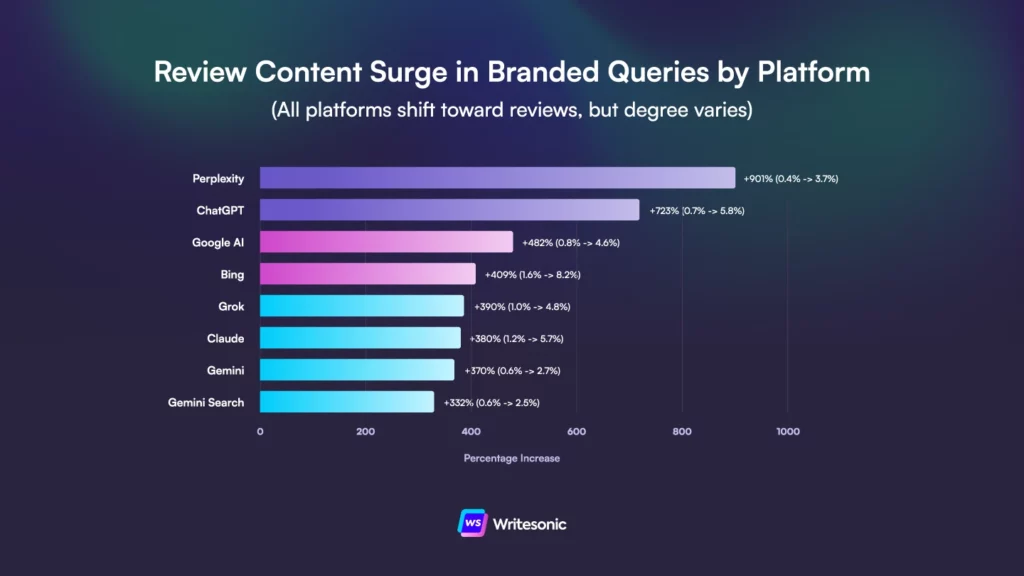

Finding #2: Reviews surge 7.4x (+637%)

- Non-branded: 0.64% of citations

- Branded: 4.71% of citations

- Lift: 7.4x (+637%)

While not altogether surprising, it’s still interesting to see just how much ground review content gains in branded prompts. Reviews don’t replace listicles; they complement them. AI platforms likely surface both, with the listicle showing the landscape and the review validating the choice.

Every platform follows the pattern. Even Gemini, which barely touches reviews in non-branded queries (0.6%), rises to 2.7% when users mention specific brands:

- Perplexity: 9.3x lift (0.4% → 3.7%)

- ChatGPT: 8.3x lift (0.7% → 5.8%)

- Google AI: 5.8x lift (0.8% → 4.6%)

- Copilot: 5.1x lift (1.6% → 8.2%)

- Grok: 4.9x lift (1.0% → 4.8%)

- Claude: 4.8x lift (1.2% → 5.7%)

- Gemini: 4.7x lift (0.6% → 2.7%)

- Gemini Search: 4.3x lift (0.6% → 2.5%)

Perplexity shows the steepest shift, though it still ends up with a lower absolute share than Copilot or ChatGPT, which cite review content the most.
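The difference between "steepest lift" and "highest share" is easy to check. The sketch below ranks platforms both ways using the rounded shares from the list above. Because the inputs are rounded, some recomputed lifts land a tenth or two away from the published ones.

```python
# (non-branded %, branded %) review citation shares, as listed above.
review_share = {
    "Perplexity": (0.4, 3.7),
    "ChatGPT": (0.7, 5.8),
    "Google AI": (0.8, 4.6),
    "Copilot": (1.6, 8.2),
    "Grok": (1.0, 4.8),
    "Claude": (1.2, 5.7),
    "Gemini": (0.6, 2.7),
    "Gemini Search": (0.6, 2.5),
}

# Rank by multiplicative lift (branded / non-branded).
by_lift = sorted(
    review_share,
    key=lambda p: review_share[p][1] / review_share[p][0],
    reverse=True,
)
# Rank by absolute share of branded citations.
by_share = sorted(review_share, key=lambda p: review_share[p][1], reverse=True)

print("Steepest lift:        ", by_lift[:3])
print("Highest branded share:", by_share[:3])
```

Perplexity tops the first ranking but sits in the lower half of the second, which is why both numbers are worth reporting.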

Finding #3: Product pages get modest, universal lifts

- Non-branded: 6.07%

- Branded: 7.44%

- Lift: 1.2x (+22.5%)

Platform-specific lifts:

- Copilot: 1.6x (6.2% → 9.7%)

- Claude: 1.6x (5.4% → 8.4%)

- Gemini Search: 1.4x (6.3% → 8.8%)

- Gemini: 1.2x (4.7% → 5.8%)

- Google AI: 1.2x (7.2% → 8.3%)

- ChatGPT: 1.1x (6.4% → 7.2%)

- Perplexity: 1.1x (6.0% → 6.7%)

- Grok: 1.1x (8.1% → 8.9%)

Every platform increases product page citations in branded queries. Not dramatically (lifts of roughly 10-60%) but consistently.

Which makes sense, as users searching “Writesonic features” or “Writesonic use cases” want the kind of authoritative information that product pages tend to provide.
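A quick way to sanity-check the "every platform" claim is to recompute each lift and confirm they all sit above 1x. This is a small sketch using the rounded shares listed above; the variable names are mine.

```python
# (non-branded %, branded %) product page citation shares, as listed above.
product_share = {
    "Copilot": (6.2, 9.7),
    "Claude": (5.4, 8.4),
    "Gemini Search": (6.3, 8.8),
    "Gemini": (4.7, 5.8),
    "Google AI": (7.2, 8.3),
    "ChatGPT": (6.4, 7.2),
    "Perplexity": (6.0, 6.7),
    "Grok": (8.1, 8.9),
}

lifts = {p: branded / non_branded for p, (non_branded, branded) in product_share.items()}

# True only if every platform cites product pages more in branded prompts.
all_up = all(lift > 1 for lift in lifts.values())
smallest = min(lifts, key=lifts.get)
largest = max(lifts, key=lifts.get)

print(f"All platforms up: {all_up}")
print(f"Smallest lift: {smallest} ({lifts[smallest]:.2f}x)")
print(f"Largest lift:  {largest} ({lifts[largest]:.2f}x)")
```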

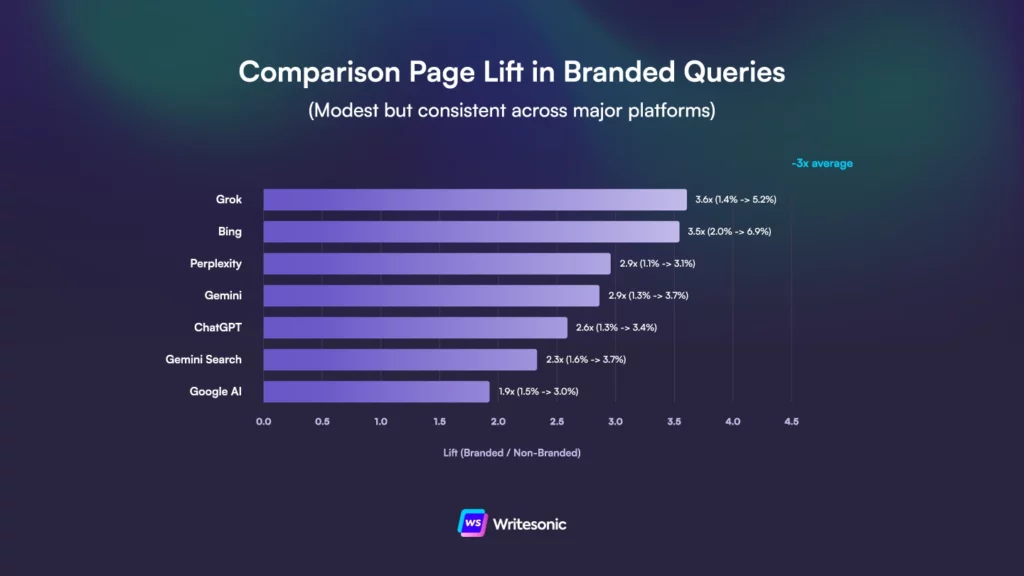

Finding #4: Comparison pages get a 2.7x lift

- Non-branded: 1.31%

- Branded: 3.60%

- Lift: 2.7x (+174%)

Platform-specific lifts:

- Grok: 3.6x (1.4% → 5.2%)

- Copilot: 3.5x (2.0% → 6.9%)

- Perplexity: 2.9x (1.1% → 3.1%)

- Gemini: 2.9x (1.3% → 3.7%)

- ChatGPT: 2.6x (1.3% → 3.4%)

- Gemini Search: 2.3x (1.6% → 3.7%)

- Google AI: 1.9x (1.5% → 3.0%)

The lifts are consistent across platforms (roughly 2-4x), suggesting this is universal behavior rather than a platform-specific quirk.

Finding #5: Press releases surge 2.3x

- Non-branded: 2.30%

- Branded: 5.39%

- Lift: 2.3x (+134%)

Platform-specific lifts:

- Claude: 3.4x (3.7% → 12.4%)

- Gemini Search: 2.7x (1.8% → 4.7%)

- Perplexity: 2.6x (1.6% → 4.3%)

- Gemini: 2.4x (1.6% → 4.0%)

- Copilot: 2.3x (2.2% → 5.0%)

- Google AI: 2.2x (2.2% → 4.9%)

- ChatGPT: 2.2x (3.2% → 6.8%)

Claude’s 3.4x lift is the outlier here, but even the “lowest” platform (ChatGPT at 2.2x) more than doubles press release citations in branded queries.

This isn’t surprising if we think about how AI visibility works. Press releases generate earned media and third-party mentions, which LLMs likely draw on to understand what your company is and does. When users search your brand name, platforms pull from the collective internet narrative about you, and press releases seed that narrative.

The 2.3x lift supports what you probably already suspected: third-party validation is as important as (or arguably more important than) owned content in AI search. Your product pages get a 1.2x lift in branded queries. Press releases about your launches get 2.3x.

If you’re already doing launches and feature announcements with PR distribution, this is validation that it’s working for AI visibility.

Finding #6: User-generated content climbs 1.5x

- Non-branded: 4.30%

- Branded: 6.25%

- Lift: 1.5x (+46%)

Platform-specific lifts:

- Copilot: 2.5x (1.8% → 4.5%)

- Google AI: 2.1x (3.3% → 6.9%)

- Grok: 1.9x (4.4% → 8.4%)

- Gemini: 1.8x (3.2% → 5.6%)

- ChatGPT: 1.4x (4.3% → 6.1%)

- Perplexity: 1.2x (6.8% → 8.4%)

Press releases represent one type of authority (media coverage, official announcements), but UGC represents another (actual users, real experiences).

When someone asks for “Slack alternatives,” AI platforms will naturally pull Reddit threads and Quora answers where people discuss whether they actually like using Slack. LLMs appear to treat community discussions as a source of ground truth that marketing pages can’t (or rather, won’t) provide.

Finding #7: The counterintuitive pricing drop

- Claude: -95.7% (0.72% → 0.03%) — near total collapse

- Perplexity: -50.3% (0.55% → 0.27%)

- ChatGPT: -41.5% (0.79% → 0.46%)

- Grok: -35.8% (0.81% → 0.52%)

- Gemini Search: -34.9% (0.71% → 0.46%)

- Google AI: -34.3% (0.57% → 0.38%)

- Copilot: -32.8% (0.54% → 0.36%)

- Gemini: -25.2% (0.33% → 0.25%)

Claude’s behavior is the most extreme, as pricing pages drop from 0.72% to essentially nothing (0.03%). But every platform shows the same pattern: when users mention brand names, pricing pages get cited less.

The drop is somewhat counterintuitive. You’d think branded queries would pull more pricing pages, but AI engines prefer other content (e.g., product pages, reviews, or comparison pages) that might mention pricing while also providing additional information and context.

This is part of a broader pattern, as LLMs don’t seem to like pricing pages much, period. Even in non-branded queries, they max out at 0.81% (Grok). In branded queries, they drop to 0.03-0.52% across platforms.
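One caveat when reading these drops: pricing pages start from shares below 1%, so a large relative drop is a small move in percentage points. Here is a rough Python sketch of both views, using the rounded shares listed above. Recomputed relative figures can differ from the published ones by a few tenths of a percent.

```python
# (non-branded %, branded %) pricing page citation shares, as listed above.
pricing_share = {
    "Claude": (0.72, 0.03),
    "Perplexity": (0.55, 0.27),
    "ChatGPT": (0.79, 0.46),
    "Grok": (0.81, 0.52),
    "Gemini Search": (0.71, 0.46),
    "Google AI": (0.57, 0.38),
    "Copilot": (0.54, 0.36),
    "Gemini": (0.33, 0.25),
}

# For each platform: (change in percentage points, relative change in percent).
drops = {
    platform: (round(branded - non_branded, 2),
               round((branded - non_branded) / non_branded * 100, 1))
    for platform, (non_branded, branded) in pricing_share.items()
}

for platform, (points, relative) in drops.items():
    print(f"{platform:<14} {points:+.2f} pp   {relative:+.1f}%")
```

Every platform moves by less than one percentage point, even where the relative drop looks dramatic.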

What this means for content strategy

The obvious takeaway is that you need different content for different funnel stages. The less obvious parts:

- Press releases matter more than expected. Their citation share doubles in branded contexts. If you’re launching products or announcing features, these get disproportionate attention when users search your brand.

- Pricing pages underperform. Platforms prefer product pages or reviews that mention pricing over dedicated pricing pages. Maybe because dedicated pricing pages lack context, or maybe platforms don’t trust them as much. Either way, don’t rely on pricing pages alone and make sure your price point is correctly reflected across other materials.

- Review content is mandatory for evaluation. The 7.4x lift is the strongest we measured. If you don’t have review content, you’re allowing competitors to own the narrative.

If you’re resource-constrained, prioritize reviews and press releases for branded queries. They show the strongest lifts and fill gaps that product pages can’t.

Platform-specific quirks

Most platforms follow the same patterns, but there are a few outliers:

- Claude’s press release obsession: 12.4% of branded citations go to press releases (3.4x lift). When users search company names on Claude, official announcements take a larger share than on any other platform.

- Copilot and Grok lean into UGC: 2.5x and 1.9x lifts respectively (Google AI sits between them at 2.1x). When users ask about specific tools on these platforms, community discussions become significantly more relevant.

- ChatGPT and Claude drop how-to docs hardest: Both lose ~56-58% of how-to citations in branded contexts. These platforms clearly distinguish between “teach me how” (non-branded) and “help me choose” (branded).

- Every platform cites pricing pages less. Counterintuitive but consistent. When users mention brands, platforms prefer product pages and reviews over dedicated pricing pages.

Methodology: Branded queries = any search explicitly mentioning a company/product/service name. Non-branded = generic category searches. Analysis based on 282,828,738 citations from 8 AI platforms across 18 industries.
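To make the split concrete, here is a minimal Python sketch of how a branded/non-branded classification and the resulting citation shares could be computed. It is an illustration under stated assumptions, not the pipeline used in the study: the brand list, the `is_branded` and `citation_shares` helpers, the content-type labels, and the sample data are all hypothetical.

```python
import re
from collections import Counter, defaultdict

# Hypothetical brand list; the study does not say how brand mentions were detected.
BRANDS = {"writesonic", "slack"}


def is_branded(prompt: str) -> bool:
    """True if the prompt contains a known brand name as a whole word.

    Single-word brands only; multi-word names would need phrase matching.
    """
    words = set(re.findall(r"[a-z0-9]+", prompt.lower()))
    return not BRANDS.isdisjoint(words)


def citation_shares(records):
    """Compute each content type's share of citations per segment, in percent.

    `records` is an iterable of (prompt, content_type) pairs, one per citation.
    """
    counts = defaultdict(Counter)
    for prompt, content_type in records:
        segment = "branded" if is_branded(prompt) else "non_branded"
        counts[segment][content_type] += 1
    return {
        segment: {ct: 100 * n / sum(c.values()) for ct, n in c.items()}
        for segment, c in counts.items()
    }


# Made-up toy data, only to show the shape of the calculation.
sample = [
    ("best AI search tools", "listicle"),
    ("best AI search tools", "listicle"),
    ("best AI search tools", "product_page"),
    ("Writesonic alternatives", "review"),
    ("Writesonic alternatives", "listicle"),
    ("Slack alternatives", "comparison"),
    ("Slack alternatives", "ugc"),
]

shares = citation_shares(sample)
print(f"Listicles, non-branded: {shares['non_branded']['listicle']:.1f}%")
print(f"Listicles, branded:     {shares['branded']['listicle']:.1f}%")
```

Matching on whole words avoids counting "slacker" as a Slack mention, at the cost of missing misspellings and multi-word brand names.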