Monitor Retrieval-Augmented Generation (RAG) Systems with MLflow | by Venkata Popuri | Jan, 2025

Organizations have been jumping on the Generative AI bandwagon ever since ChatGPT launched in November 2022. Investment in Generative AI is growing considerably year over year, and Gartner estimates that AI software spending will grow to $297 billion by 2027. Organizations are transforming their businesses with Generative AI and large language models (LLMs) and are starting to realize benefits such as new revenue streams, better customer experience, and lower costs.

This tutorial briefly describes how MLflow services, including the MLflow AI Gateway, the MLflow Tracking Server, and MLflow metrics, can be used to monitor RAG-based systems. It focuses on relevance and latency, two of the most important performance metrics for RAG systems. A note: this tutorial builds on the sample notebook in the MLflow documentation. However, instead of using LLM endpoints hosted on Databricks, I used a local MLflow AI Gateway to configure the LLM endpoints and called the gateway's APIs to access the embeddings and completions models.

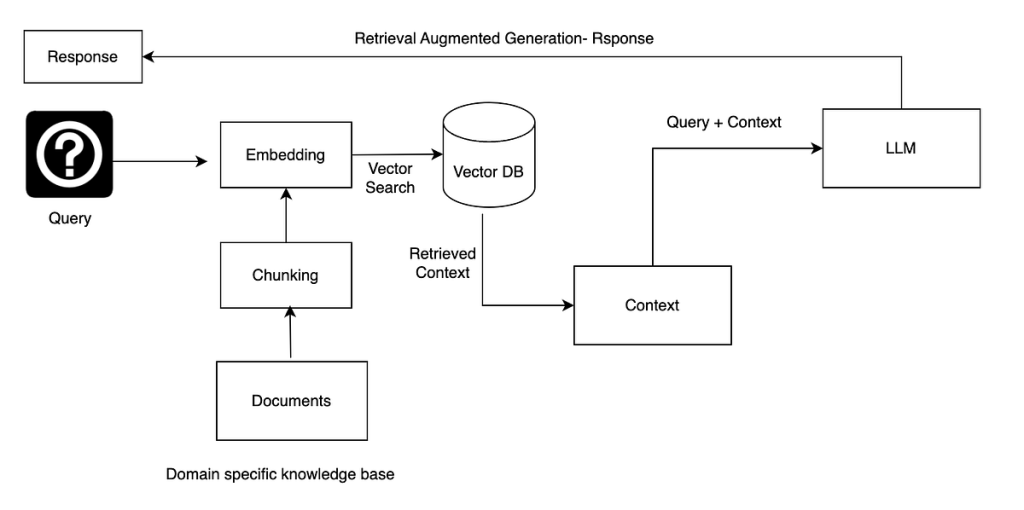

Before discussing the core objective of this tutorial, let's look at what RAG is, how it works, and the basic building blocks of a RAG application.

Retrieval-Augmented Generation (RAG) adds business-specific context to Generative AI applications such as Q&A chatbots, content generation, and product development. It has become a core pattern in Generative AI applications, and to succeed, RAG applications must be monitored for accuracy (so that responses are correct) and for latency (so that responses are served in time).

Retrieval

When a query comes in, the system retrieves relevant information from external knowledge sources (e.g., databases, documents, or APIs). Retrieval is often powered by semantic (vector) similarity search using tools such as Elasticsearch, Pinecone, or FAISS.
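To make the retrieval step concrete, here is a minimal brute-force sketch of vector similarity search with NumPy. The function name `top_k_by_cosine` and the toy vectors are illustrative assumptions; production vector stores such as Pinecone or FAISS use optimized, often approximate, nearest-neighbor indexes rather than scanning every vector.

```python
import numpy as np


def top_k_by_cosine(query_vec, doc_vecs, k=3):
    """Return (indices, scores) of the k document vectors most similar to the query.

    Assumes non-zero vectors; similarity is cosine similarity.
    """
    query = np.asarray(query_vec, dtype=float)
    docs = np.asarray(doc_vecs, dtype=float)
    # Normalize so that a plain dot product equals cosine similarity
    query = query / np.linalg.norm(query)
    docs = docs / np.linalg.norm(docs, axis=1, keepdims=True)
    scores = docs @ query
    # argsort is ascending; reverse it so the highest scores come first
    order = np.argsort(scores)[::-1][:k]
    return order.tolist(), scores[order].tolist()
```

For example, `top_k_by_cosine([1.0, 0.1], [[1, 0], [0, 1], [0.7, 0.7]], k=2)` ranks document 0 first and the diagonal document 2 second; in a real RAG system the vectors would come from an embedding model.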

Augmentation

The retrieved information is passed to the Generative AI model as context. This augmentation lets the model generate more accurate, better-informed responses that are grounded in the retrieved data.
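In practice, augmentation amounts to placing the retrieved chunks into the prompt sent to the model. The sketch below shows one simple way to do it; the template wording and the name `build_augmented_prompt` are assumptions of ours, similar in spirit to (but not the same as) the prompt LangChain builds for the "stuff" chain type used later in this post.

```python
def build_augmented_prompt(question, retrieved_chunks, max_chunks=3):
    """Insert the top retrieved chunks into a prompt template as grounding context."""
    # Number each chunk so the model (and a human reviewer) can refer to it
    context = "\n\n".join(
        f"[{i + 1}] {chunk.strip()}"
        for i, chunk in enumerate(retrieved_chunks[:max_chunks])
    )
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )
```

Limiting `max_chunks` keeps the prompt within the model's context window; the instruction to answer only from the context is what pushes the model toward grounded responses.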

Generation

The Generative AI model, equipped with contextual data, generates a response that integrates the retrieved knowledge, producing a more precise and contextually relevant output.

A RAG system consists of several components: loading structured and unstructured data from knowledge-base documents, text splitting (chunking), embedding, storing the embeddings in a vector database, retrieving content, and an LLM such as OpenAI's GPT models, PaLM 2, Llama 2, or Anthropic's Claude.

Evaluate Chunking: Once the domain-specific documents are loaded, they must be broken into smaller segments before being embedded and stored in a vector database. This is called chunking. The chunk size must be tuned so that each segment preserves enough context to be relevant and accurate while adding minimal noise: chunks that are too large dilute semantic search results with unrelated text, and chunks that are too small lose context.

Chunking methods include fixed-size, content-aware, recursive, specialized, and semantic chunking. The right strategy depends on the type of content, the embedding model (for example, sentence-transformers models or text-embedding-ada-002), the length and complexity of user queries, and how the retrieved results are used by the specific RAG application.
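Fixed-size chunking, the simplest of these strategies, can be sketched in a few lines of Python. This is an illustrative character-window splitter with optional overlap; it is not LangChain's `CharacterTextSplitter`, which first splits on a separator (a blank line by default) and then merges the pieces up to `chunk_size`.

```python
def fixed_size_chunks(text, chunk_size=1000, chunk_overlap=0):
    """Split text into windows of at most chunk_size characters.

    Consecutive windows share chunk_overlap characters, which helps keep
    sentences that straddle a boundary retrievable from either chunk.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once this window reaches the end, so no tiny trailing duplicate is emitted
        if start + chunk_size >= len(text):
            break
    return chunks
```

For example, `fixed_size_chunks("abcdefghij", chunk_size=4, chunk_overlap=1)` returns `["abcd", "defg", "ghij"]`.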

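Chunking can be evaluated with the mlflow.evaluate() function. For example, CHUNK_SIZE can be set to different values; for each value, the text corpus is split, embedded, stored, and retrieved, and the retriever is then scored with mlflow.evaluate() so the chunk sizes can be compared. (With model_type="retriever", MLflow reports retrieval metrics such as precision_at_k; latency and relevance are measured in the experiments later in this post.)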

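```python
import mlflow
from langchain.text_splitter import CharacterTextSplitter
from langchain.vectorstores import Chroma

# loader, embedding_function, retriever_model_function and eval_data are defined elsewhere
CHUNK_SIZE = 1000  # rerun with other values (e.g. 500, 2000) and compare the runs

list_of_documents = loader.load()
text_splitter = CharacterTextSplitter(chunk_size=CHUNK_SIZE, chunk_overlap=0)
docs = text_splitter.split_documents(list_of_documents)
retriever = Chroma.from_documents(docs, embedding_function).as_retriever()

with mlflow.start_run() as run:
    mlflow.log_param("chunk_size", CHUNK_SIZE)  # record the setting being compared
    evaluate_results = mlflow.evaluate(
        model=retriever_model_function,
        data=eval_data,
        model_type="retriever",
        targets="source",
        evaluators="default",
    )
```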
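The snippet above relies on a `retriever_model_function`. As we understand MLflow's retriever evaluation, the model is called on the question DataFrame and must return, for each row, the list of retrieved document IDs, which are compared with the ground-truth IDs in the `targets` column (here `source`). A hedged sketch of such a wrapper follows; the factory name, the `question` column name, and the use of each document's `metadata["source"]` as its ID are assumptions, so adjust them to your evaluation data.

```python
import pandas as pd


def make_retriever_model_function(retrieve, question_col="question"):
    """Wrap a retrieval callable so mlflow.evaluate(model_type="retriever") can call it.

    `retrieve` takes a question string and returns objects with a `.metadata`
    dict (such as LangChain Documents). The wrapper returns, per question,
    the list of retrieved document sources.
    """

    def retriever_model_function(question_df: pd.DataFrame) -> pd.Series:
        return question_df[question_col].apply(
            lambda question: [doc.metadata["source"] for doc in retrieve(question)]
        )

    return retriever_model_function
```

With a LangChain retriever this could be used as `retriever_model_function = make_retriever_model_function(retriever.invoke)`.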

Evaluate Embedding: Embedding converts text into high-dimensional vectors that preserve semantic meaning and relationships, which are then stored in a vector database. Documents can be embedded with different embedding models, such as sentence-transformers models or text-embedding-ada-002. The vectors are stored and managed with a vector index in the vector database.

In the snippet below, the embedding model can be swapped out, and the relevance and accuracy of the RAG application measured with mlflow.evaluate() as above.

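```python
from langchain_community.embeddings import SentenceTransformerEmbeddings
from langchain_community.vectorstores import Chroma

embedding_function = SentenceTransformerEmbeddings(model_name="all-MiniLM-L6-v2")
retriever = Chroma.from_documents(docs, embedding_function).as_retriever()
```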
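To compare embedding models on the same questions, a simple retrieval-quality score helps. MLflow's retriever evaluator reports a metric named precision_at_k; the function below is our own illustrative version of one common definition, not MLflow's source, so small convention differences (for example, how fewer than k results are handled) are possible.

```python
def precision_at_k(retrieved, relevant, k=3):
    """Fraction of the top-k retrieved items that appear in the ground-truth relevant set.

    If fewer than k items were retrieved, the denominator is the number retrieved.
    """
    if k <= 0:
        raise ValueError("k must be positive")
    top_k = list(retrieved)[:k]
    if not top_k:
        return 0.0
    relevant_set = set(relevant)
    hits = sum(1 for item in top_k if item in relevant_set)
    return hits / len(top_k)
```

Averaging this score over all evaluation questions, once per embedding model, gives a single number per model to compare.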

Evaluate Vector databases: Vector databases such as Chroma, Pinecone, Qdrant, and Milvus store and manage the high-dimensional vectors created by embedding functions. These vectors can represent text, images, or audio. Vector databases enable performant, scalable semantic search and retrieval.

To measure how well a vector database suits a specific RAG application, the same loaded documents can be stored in several databases and evaluated with the same questions to select the most appropriate one.
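MLflow's latency metric (mlflow.metrics.latency(), used in the experiments below) records how long each prediction takes during mlflow.evaluate(). When comparing vector databases, it can also help to time retrieval on its own. The sketch below is a standalone, hypothetical helper built on `time.perf_counter`; it is not part of MLflow.

```python
import statistics
import time


def measure_latency(retrieve, questions, repeats=1):
    """Call retrieve(question) for each question; return (per-call seconds, mean seconds)."""
    latencies = []
    for question in questions:
        for _ in range(repeats):
            start = time.perf_counter()
            retrieve(question)  # the result is discarded; only elapsed time matters here
            latencies.append(time.perf_counter() - start)
    if not latencies:
        raise ValueError("questions must not be empty")
    return latencies, statistics.mean(latencies)
```

Passing each store's retriever (for example, the `invoke` method of a LangChain retriever) with the same question list gives directly comparable numbers; `repeats` smooths out one-off delays such as cold caches.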

Monitoring all of the above components is key to a successful RAG application. MLflow provides a wealth of metrics for evaluating and monitoring chunking, embeddings, and vector stores. We go over the metrics MLflow supplies in the following sections.

To introduce RAG system evaluation with MLflow, two experiments were conducted: one with the Chroma vector database and one with the Qdrant vector database. The same set of five questions was run against each vector store, and MLflow's relevance and latency metrics were captured and visualized in the MLflow Tracking UI.

MLflow is an open-source platform that offers services and libraries for deploying and monitoring AI/ML and Generative AI applications. This post illustrates how LLMs can be deployed and monitored using the MLflow AI Gateway (Experimental), and how Retrieval-Augmented Generation (RAG) metrics, namely relevance and latency, are monitored and reported with MLflow. The building blocks of this illustration are the MLflow AI Gateway (Experimental), Azure OpenAI LLMs for embeddings and completions, the Chroma and Qdrant vector databases, and the MLflow Tracking Server. LangChain stitches all the components together.

The MLflow AI Gateway is an experimental service. It provides a centralized configuration store for LLM providers such as OpenAI, Azure OpenAI, and Anthropic, including their endpoints (chat, completions, embeddings) and API keys, as shown below. Because the gateway exposes these settings through APIs, provider and API configuration does not need to live in application code. For the complete list of supported providers, see the MLflow documentation.

The MLflow Tracking Server tracks LLM experiments. Once logging is enabled, it displays per-run metrics in tabular and graphical form, and the Tracking UI can be used to compare runs and identify issues with them. This post includes some useful graphs and tables from the MLflow Tracking UI. The mlflow.evaluate() function provides several metrics beyond the relevance and latency metrics used here; see the MLflow documentation on LLM evaluation for the full list.

Note: The code featured in this blog post can be downloaded from the Git repository.

Steps for configuring the MLflow AI Gateway (Experimental) locally

- Install the MLflow AI Gateway (Experimental) by running `pip install 'mlflow[genai]'` in a terminal window. Note that the MLflow AI Gateway is not supported on Windows.

- Set the environment variables that the configuration refers to, for example AZURE_OPENAI_API_KEY, OPENAI_API_KEY, and AZURE_OPENAI_ENDPOINT.

- Define the endpoints in a config.yaml file like the one below:

```yaml
endpoints:
  - name: chat
    endpoint_type: llm/v1/chat
    model:
      provider: openai
      name: gpt-4
      config:
        openai_api_type: "azure"
        openai_api_key: $AZURE_OPENAI_API_KEY
        openai_deployment_name: "gpt-4"
        openai_api_base: $AZURE_OPENAI_ENDPOINT
        openai_api_version: "2023-05-15"

  - name: completions
    endpoint_type: llm/v1/completions
    model:
      provider: openai
      name: gpt-4
      config:
        openai_api_type: "azure"
        openai_api_key: $AZURE_OPENAI_API_KEY
        openai_deployment_name: "gpt-4"
        openai_api_base: $AZURE_OPENAI_ENDPOINT
        openai_api_version: "2023-05-15"

  - name: embeddings
    endpoint_type: llm/v1/embeddings
    model:
      provider: openai
      name: text-embedding-ada-002
      config:
        openai_api_type: "azure"
        openai_api_key: $AZURE_OPENAI_API_KEY
        openai_deployment_name: "text-embedding-ada-002"
        openai_api_base: $AZURE_OPENAI_ENDPOINT
        openai_api_version: "2023-05-15"
```
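The gateway resolves the `$`-prefixed values in config.yaml from environment variables, so it is worth confirming they are set before starting it. A small, hypothetical helper for that check (the variable names are the ones listed in the setup steps):

```python
import os

# Variables named in the setup steps; adjust to match your config.yaml
REQUIRED_VARS = ("AZURE_OPENAI_API_KEY", "OPENAI_API_KEY", "AZURE_OPENAI_ENDPOINT")


def missing_env_vars(names=REQUIRED_VARS, environ=None):
    """Return the names of required environment variables that are unset or empty."""
    env = os.environ if environ is None else environ
    return [name for name in names if not env.get(name)]
```

Calling `missing_env_vars()` in the same shell session that will launch the gateway should return an empty list; any names it returns still need to be exported.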

Post-configuration steps

- Start the MLflow AI Gateway with `mlflow gateway start --config-path config.yaml --port {port} --host {host} --workers {worker count}`. The port, host, and workers parameters are optional. (In newer MLflow releases, the gateway became the MLflow Deployments Server and is started with `mlflow deployments start-server --config-path config.yaml`.)

- Once config.yaml is in place, the configured endpoints can be accessed from Jupyter notebooks through APIs. For example, to access the completions endpoint: `llm = Mlflow(target_uri="http://127.0.0.1:5000", endpoint="completions")`

- Start the MLflow Tracking Server: `mlflow server --host 127.0.0.1 --port 8080`

- Verify that the MLflow AI Gateway is installed and running by browsing to http://127.0.0.1:5000 (if the default port is used; otherwise, use the port selected at startup).

- Verify that the MLflow Tracking Server is installed and running by browsing to http://127.0.0.1:8080 (the port passed to `mlflow server` above).
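The same verification can be scripted. As we understand it, both the MLflow Tracking Server and the gateway expose a `/health` route; treat that path as an assumption and fall back to the browser check if your version differs. `is_server_up` is a hypothetical helper using only the standard library.

```python
import urllib.request


def is_server_up(base_url, timeout=2.0):
    """Return True if GET {base_url}/health answers with HTTP 200, else False."""
    url = base_url.rstrip("/") + "/health"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (OSError, ValueError):
        # URLError, HTTPError and timeouts subclass OSError; a malformed URL raises ValueError
        return False
```

For this setup, `is_server_up("http://127.0.0.1:5000")` checks the gateway and `is_server_up("http://127.0.0.1:8080")` checks the tracking server.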

Sample Code

Now, let’s dive into the basic building blocks of the illustration.

```python
!pip install -r requirements.txt

import os

import mlflow
import pandas as pd
from langchain.chains import RetrievalQA
from langchain.document_loaders import WebBaseLoader
from langchain.llms import Mlflow
from langchain.text_splitter import CharacterTextSplitter
from langchain.vectorstores import Chroma, Qdrant
from langchain_community.embeddings import MlflowEmbeddings
from mlflow.metrics.genai import relevance

# Read the credentials set as environment variables earlier
AZURE_OPENAI_API_KEY = os.environ.get("AZURE_OPENAI_API_KEY")
OPENAI_API_KEY = os.environ.get("OPENAI_API_KEY")
AZURE_OPENAI_ENDPOINT = os.environ.get("AZURE_OPENAI_ENDPOINT")

# Log runs to the local MLflow Tracking Server started earlier
tracking_uri = "http://localhost:8080/"
mlflow.set_tracking_uri(tracking_uri)

# LLM served through the "completions" endpoint of the local MLflow AI Gateway
llm = Mlflow(target_uri="http://127.0.0.1:5000", endpoint="completions")
```

```python
# Load MLflow documentation pages with LangChain's WebBaseLoader and build a Chroma-backed retriever
loader = WebBaseLoader(
    [
        "https://mlflow.org/docs/latest/index.html",
        "https://mlflow.org/docs/latest/tracking/autolog.html",
        "https://mlflow.org/docs/latest/deep-learning/index.html",
        "https://mlflow.org/docs/latest/getting-started/tracking-server-overview/index.html",
        "https://mlflow.org/docs/latest/python_api/mlflow.deployments.html",
    ]
)
documents = loader.load()

# Split the pages into chunks of up to 1,000 characters
CHUNK_SIZE = 1000
text_splitter = CharacterTextSplitter(chunk_size=CHUNK_SIZE, chunk_overlap=0)
texts = text_splitter.split_documents(documents)

# Embed the chunks through the gateway's "embeddings" endpoint
embeddings = MlflowEmbeddings(
    target_uri="http://127.0.0.1:5000",
    endpoint="embeddings",
)
docsearch = Chroma.from_documents(texts, embeddings)

# Question-answering chain: retrieve the top 3 chunks and "stuff" them into the LLM prompt
qa = RetrievalQA.from_chain_type(
    llm=llm,
    chain_type="stuff",
    retriever=docsearch.as_retriever(search_kwargs={"k": 3}),
    return_source_documents=True,
)
```

```python
# Create a DataFrame with the questions to evaluate each retriever on
eval_df = pd.DataFrame(
    {
        "questions": [
            "What is MLflow?",
            "What is deep learning?",
            "How to monitor models using mlflow?",
            "Explain Mlflow auto logging feature",
            "What is Deep learning",
        ],
    }
)
```

```python
from langchain.chains.query_constructor.schema import AttributeInfo
from langchain.retrievers.self_query.base import SelfQueryRetriever
from langchain.retrievers.self_query.qdrant import QdrantTranslator

# Initialize an in-memory Qdrant store from the same chunks and embeddings
Qdrant_store = Qdrant.from_documents(
    texts,
    embeddings,
    location=":memory:",  # local mode with in-memory storage only
    collection_name="MLflow Collection",
)

# Describe the page metadata fields the self-query retriever may filter on
metadata_field_info = [
    AttributeInfo(
        name="source",
        description="Mlflow documentation link",
        type="string",
    ),
    AttributeInfo(
        name="title",
        description="Title of the web page",
        type="string or list[string]",
    ),
    AttributeInfo(
        name="language",
        description="Language of mlflow documentation",
        type="string",
    ),
]
document_content_description = "Mlflow documentation repository"

# The LLM turns each question into a search query plus an optional metadata filter,
# which QdrantTranslator converts into a Qdrant filter
qdrant_retriever = SelfQueryRetriever.from_llm(
    llm,
    Qdrant_store,
    document_content_description,
    metadata_field_info,
    structured_query_translator=QdrantTranslator(metadata_key="metadata"),
    verbose=True,
)
```
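The SelfQueryRetriever above asks the LLM to turn a natural-language question into a search string plus a metadata filter over the attributes declared in `metadata_field_info`. The toy sketch below illustrates only the filtering half, with a single equality comparison and a hand-written structured query; it is not LangChain's implementation, and `StructuredQuery` and `apply_metadata_filter` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StructuredQuery:
    """Hypothetical stand-in for the LLM's output: search text plus one optional equality filter."""

    query: str
    attribute: Optional[str] = None  # e.g. "source", one of the AttributeInfo names
    value: Optional[str] = None


def apply_metadata_filter(docs, structured):
    """Keep docs whose metadata[attribute] equals value; keep all docs when there is no filter."""
    if structured.attribute is None:
        return list(docs)
    return [
        doc for doc in docs
        if doc["metadata"].get(structured.attribute) == structured.value
    ]
```

The similarity search on `structured.query` then runs only over the documents that pass the filter, which is how a question such as "autologging in the tracking docs" can be narrowed to one source page.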

```python
# Wrap each retriever as a function that mlflow.evaluate() can call on the question DataFrame
def chroma_model(input_df):
    # RetrievalQA returns a dict per question with "query", "result" and "source_documents"
    return input_df["questions"].map(qa).tolist()


def qdrant_model(input_df):
    # SelfQueryRetriever returns the list of matching Documents for each question
    return input_df["questions"].map(qdrant_retriever.invoke)


def mlflow_evaluate(vectordb, input_df):
    """Evaluate one vector store with MLflow's LLM-judged relevance and latency metrics."""
    models = {"chroma": chroma_model, "qdrant": qdrant_model}
    model = models[vectordb.lower()]
    with mlflow.start_run(run_name=vectordb):
        results = mlflow.evaluate(
            model,
            data=input_df,
            # model_type="question-answering",
            evaluators="default",
            predictions="result",
            extra_metrics=[relevance(), mlflow.metrics.latency()],
            evaluator_config={
                "col_mapping": {
                    "inputs": "questions",
                    "context": "result",
                }
            },
        )
    print(results.metrics)
    display(results.tables["eval_results_table"])  # display() is available in Jupyter
    print("See aggregated evaluation results below:\n\n")
    return pd.DataFrame(results.metrics, index=[0])
```
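mlflow.evaluate() scores each question individually (the per-row values appear in eval_results_table) and then aggregates them into metrics such as relevance/v1/mean and relevance/v1/p90, which mlflow_evaluate() returns as a one-row DataFrame. The sketch below reproduces those two aggregations with NumPy; it assumes linear-interpolation percentiles (NumPy's default), and we have not confirmed that MLflow computes p90 the same way.

```python
import numpy as np


def aggregate_scores(per_row_scores):
    """Summarize per-question scores as their mean and 90th percentile."""
    scores = np.asarray(per_row_scores, dtype=float)
    return {
        "mean": float(np.mean(scores)),
        "p90": float(np.percentile(scores, 90)),
    }
```

For scores `[1, 2, 3, 4, 5]` this gives a mean of 3.0 and a p90 of 4.6. With only five questions, p90 sits between the two highest scores, so a single question can move it noticeably.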

```python
# Experiment 1: evaluate the RAG system's relevance and latency with the Chroma vector database
df_c = mlflow_evaluate("Chroma", eval_df)

# Experiment 2: evaluate the RAG system's relevance and latency with the in-memory Qdrant store
df_q = mlflow_evaluate("Qdrant", eval_df)
```

```python
import matplotlib.pyplot as plt
import numpy as np

# Plot the relevance and latency values from experiments 1 and 2 side by side
labels = ["latency/mean", "relevance/v1/mean", "relevance/v1/p90"]
c_values = df_c[labels].squeeze()
q_values = df_q[labels].squeeze()

x = np.arange(len(labels))  # the label locations
width = 0.35  # the width of the bars

fig, ax = plt.subplots()
rects1 = ax.bar(x - width / 2, c_values, width, label="Chroma")
rects2 = ax.bar(x + width / 2, q_values, width, label="Qdrant")

# Add the y-axis label, title, custom x-axis tick labels, and value labels on the bars
ax.set_ylabel("Value")
ax.set_title("Comparison of relevance and latency metrics between Chroma and Qdrant")
ax.set_xticks(x, labels)
ax.legend()
ax.bar_label(rects1, padding=3)
ax.bar_label(rects2, padding=3)

fig.tight_layout()
plt.show()
```

Sources

1. MLflow documentation

2. LangChain documentation, self-querying retriever: https://python.langchain.com/docs/how_to/self_query/

Open the tracking server at http://127.0.0.1:8080 and select an experiment on the landing page to see its details.

Compare runs

Once experimentation is complete, the MLflow Tracking UI can be used to compare latency, relevance, and other metrics across all experiments. The charts below, created by the MLflow UI, show latency and relevance across all runs.

Note: This comparison is for illustration purposes only and does not indicate that one vector database is better than the other.

We reviewed the key concepts and components of RAG-based applications, why such applications must be monitored to be deployed and operated successfully, and how they can be monitored with mlflow.evaluate(), MLflow metrics, and the MLflow Tracking UI. Note that the MLflow AI Gateway is an experimental component; see the references below for more information on the topics this post covers.

1. Lewis, P., Perez, E., Piktus, A., Petroni, F., Karpukhin, V., Goyal, N., Küttler, H., Lewis, M., Yih, W., Rocktäschel, T., Riedel, S., & Kiela, D. (2020, May 22). Retrieval-Augmented Generation for Knowledge-Intensive NLP tasks. arXiv.org. https://arxiv.org/abs/2005.11401

2. What is MLflow? (n.d.). https://www.mlflow.org/docs/2.7.1/what-is-mlflow.html

3. MLflow AI Gateway (Experimental). (n.d.). https://mlflow.org/docs/latest/llms/deployments/index.html

4. MLflow Tracking Server. (n.d.). https://mlflow.org/docs/latest/tracking/server.html

5. MLflow LLM Evaluation. (n.d.). https://mlflow.org/docs/latest/llms/llm-evaluate/index.html